|

I am Ahmad Sajedi, currently a Machine Learning Engineer II at Instacart. Before this role, I completed my Ph.D. at the University of Toronto, where I was fortunate to be advised by Prof. Konstantinos N. Plataniotis and Prof. Yuri A. Lawryshyn. Prior to joining UofT, I received my M.Sc. degree from the Electrical and Computer Engineering Department at the University of Waterloo, where I was mentored by Prof. En-Hui Yang. My research focuses primarily on Efficient Learning, Computer Vision, and Visual Multilabel Representations. I am open to collaboration and happy to answer any questions about my research. Please do not hesitate to reach out to me via email.

Email: sajedi [dot] ah [at] gmail [dot] com |

|

News |

|

• [Feb. 2025]: Congrats to my coauthors on their paper being accepted to Pattern Recognition (Elsevier).
• [Oct. 2024]: Started at Instacart 🥕 as a Machine Learning Engineer II.
• [Sep. 2024]: Successfully passed my final Ph.D. oral examination and was officially awarded my Ph.D. degree.
• [Jul. 2024]: Data-to-Model Distillation paper has been accepted by ECCV 2024. Paper, code, and webpage coming soon.
• [May 2024]: Passed my Ph.D. Departmental Oral Examination. My thesis has been nominated for an award!
• [May 2024]: My invention disclosures for two patents, DataDAM and D2M, have been accepted as complete.
• [Apr. 2024]: ATOM has been accepted by CVPR-DD 2024.
• [Apr. 2024]: The First Dataset Distillation Challenge has been accepted by ECCV 2024. I am serving as a Primary Chair.
• [Apr. 2024]: Gave a talk at Royal Bank of Canada (RBC), invited by Prof. Yuri A. Lawryshyn. Slides coming soon.
• [Dec. 2023]: ProbMCL has been accepted by ICASSP 2024.
• [Jul. 2023]: DataDAM has been accepted by ICCV 2023.
• [May 2023]: Gave a talk at Royal Bank of Canada (RBC), invited by Prof. Yuri A. Lawryshyn. Slides coming soon.
• [Feb. 2023]: A New Probabilistic Distance has been accepted by ICASSP 2023.
• [May 2022]: SKD has been accepted by IVMSP 2022.
• [Dec. 2021]: Passed my Ph.D. Thesis Proposal defense! | |

Publications & Manuscripts |

|

FedPnP: Personalized Graph-Structured Federated Learning

Arash Rasti-Meymandi, Ahmad Sajedi, Konstantinos N. Plataniotis

Pattern Recognition (Elsevier), 2025

Paper

We introduce a novel personalized federated learning algorithm that leverages the inherent graph-based relationships among clients. |

|

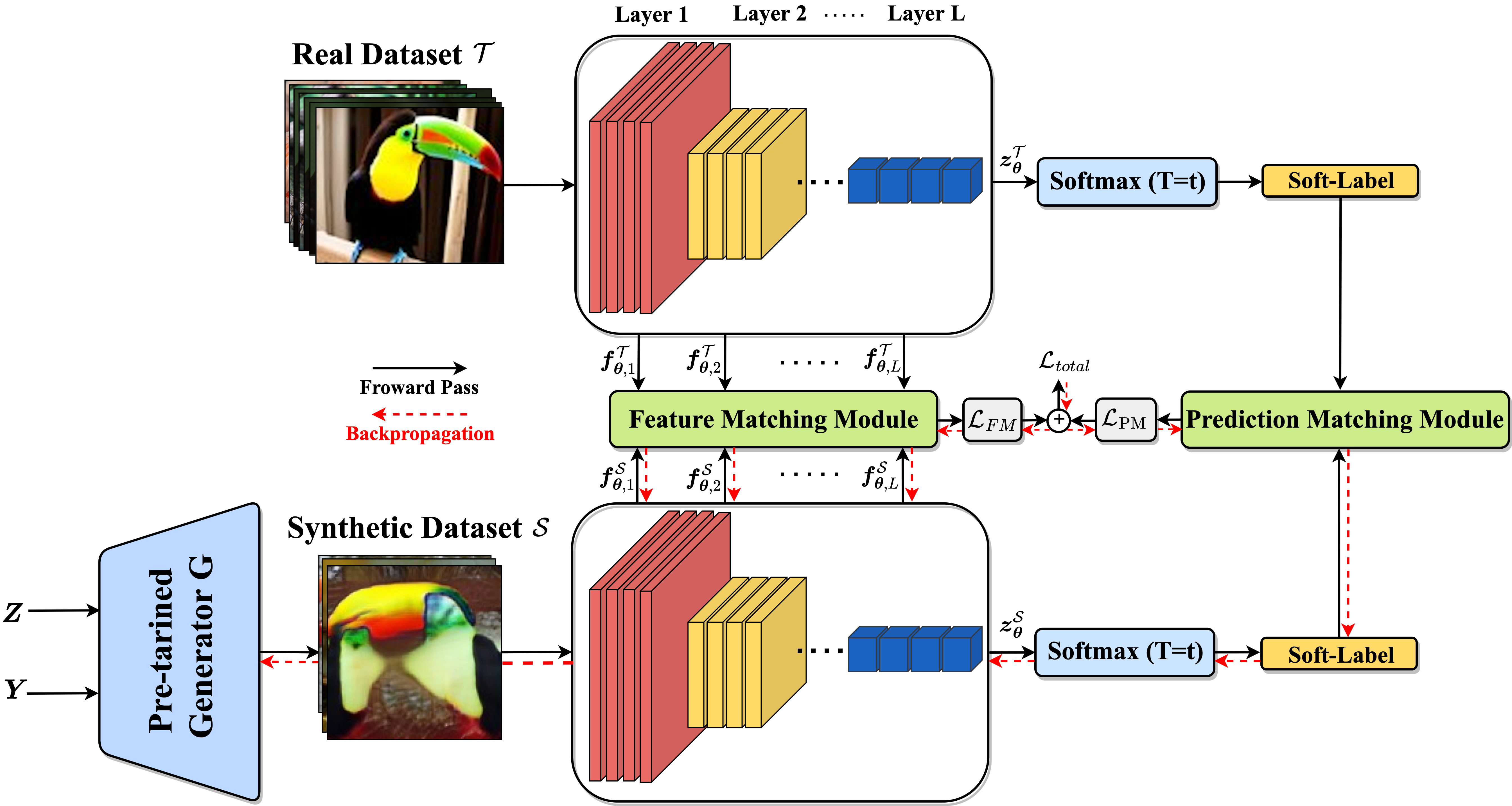

Data-to-Model Distillation: Data-Efficient Learning Framework

Ahmad Sajedi, Samir Khaki, Lucy Z. Liu, Ehsan Amjadian, Yuri A. Lawryshyn, Konstantinos N. Plataniotis

ECCV, 2024

Paper

We present D2M, which embeds knowledge into a generative model, allowing for efficient and scalable training across various distillation ratios and architectures. |

|

ATOM: Attention Mixer for Efficient Dataset Distillation

Samir Khaki, Ahmad Sajedi, Kai Wang, Lucy Z. Liu, Yuri A. Lawryshyn, Konstantinos N. Plataniotis

CVPR-DD, 2024

Paper | Code (Coming Soon)

We introduce the ATOM module to leverage contextual and localization information from the channel and spatial attention mechanisms to improve dataset distillation efficiency. |

|

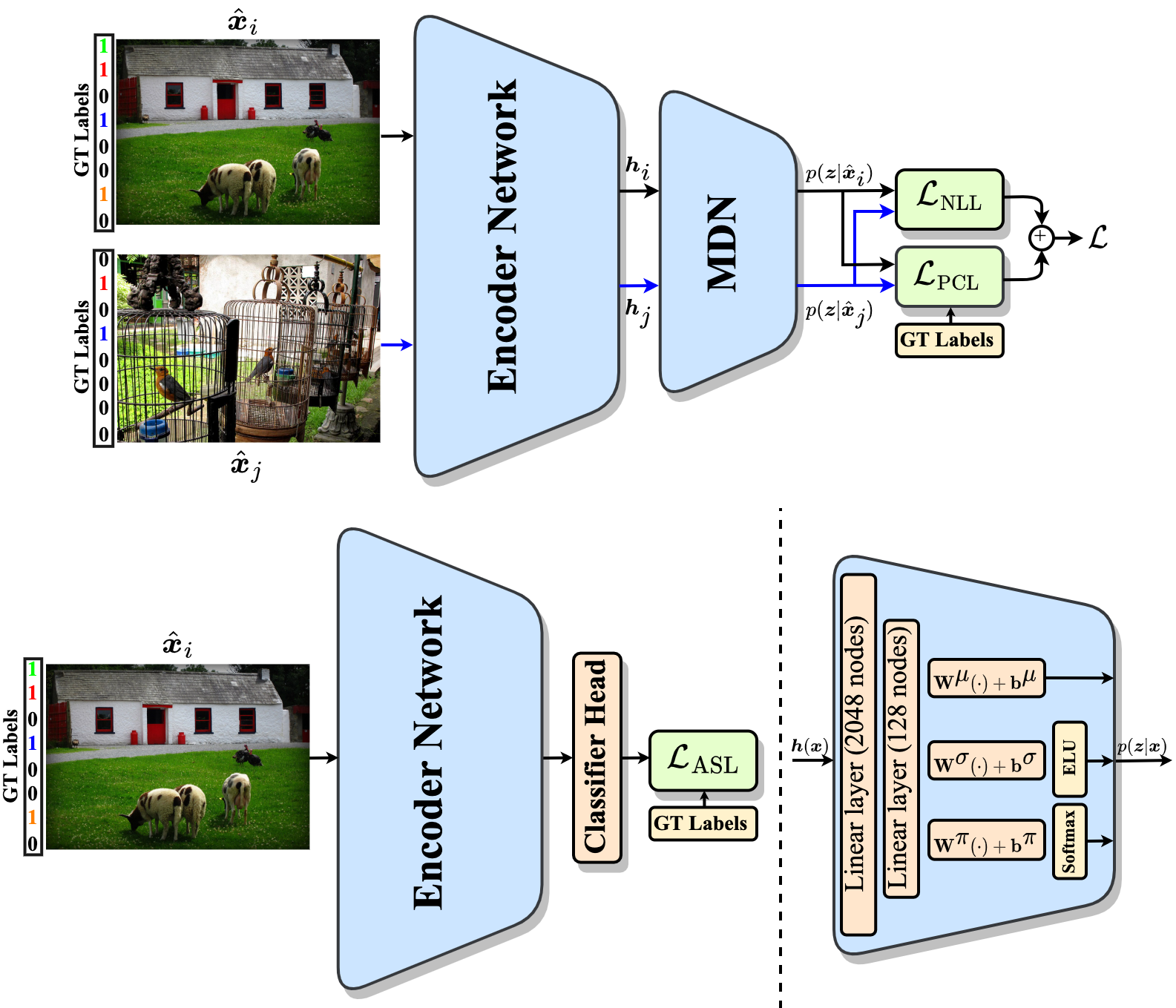

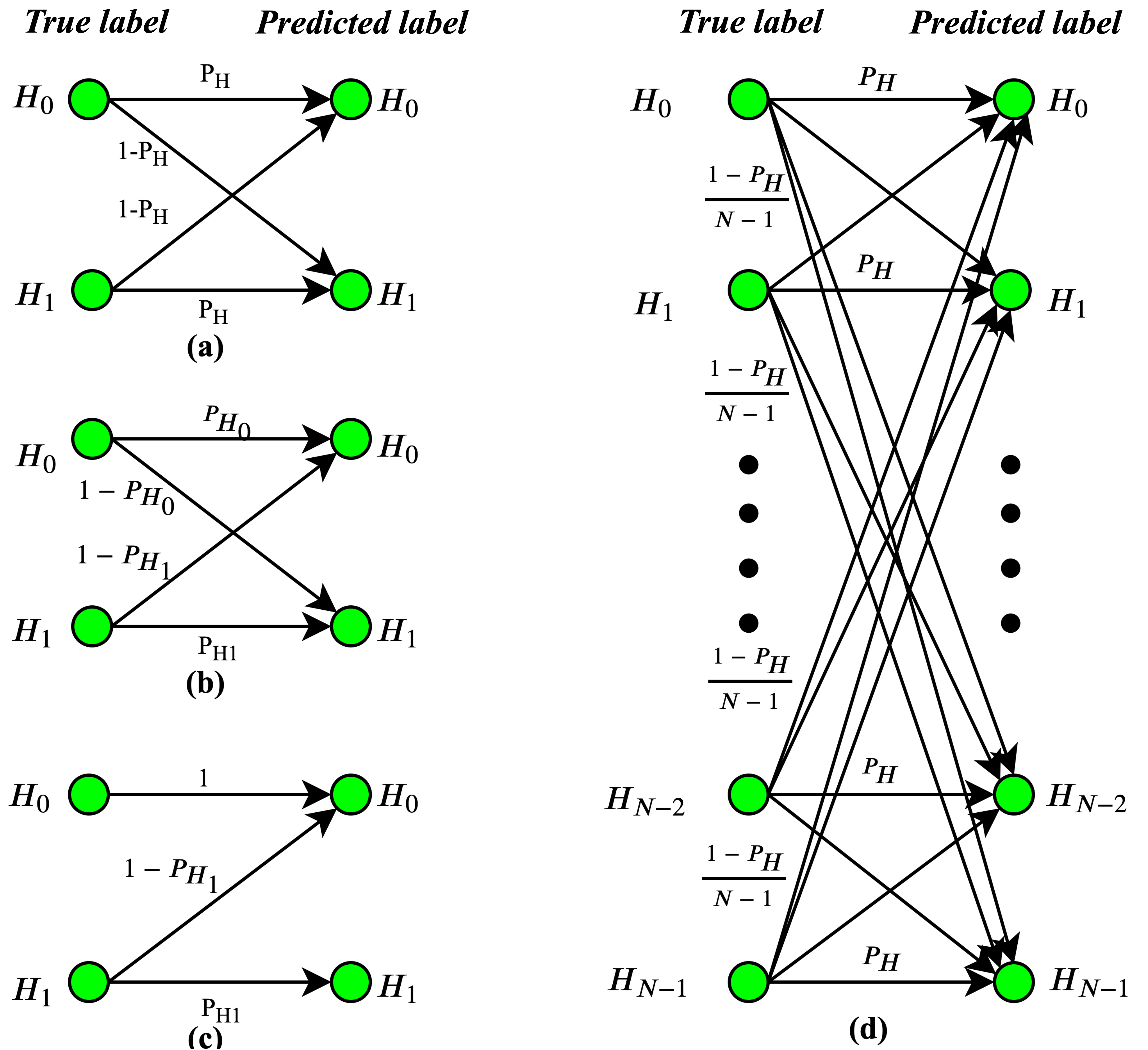

ProbMCL: Simple Probabilistic Contrastive Learning For Multi-label Visual Classification

Ahmad Sajedi, Samir Khaki, Yuri A. Lawryshyn, Konstantinos N. Plataniotis

ICASSP, 2024

Website | Paper

We propose a simple yet effective probabilistic contrastive learning framework for multi-label image classification tasks using Gaussian mixture models. |

|

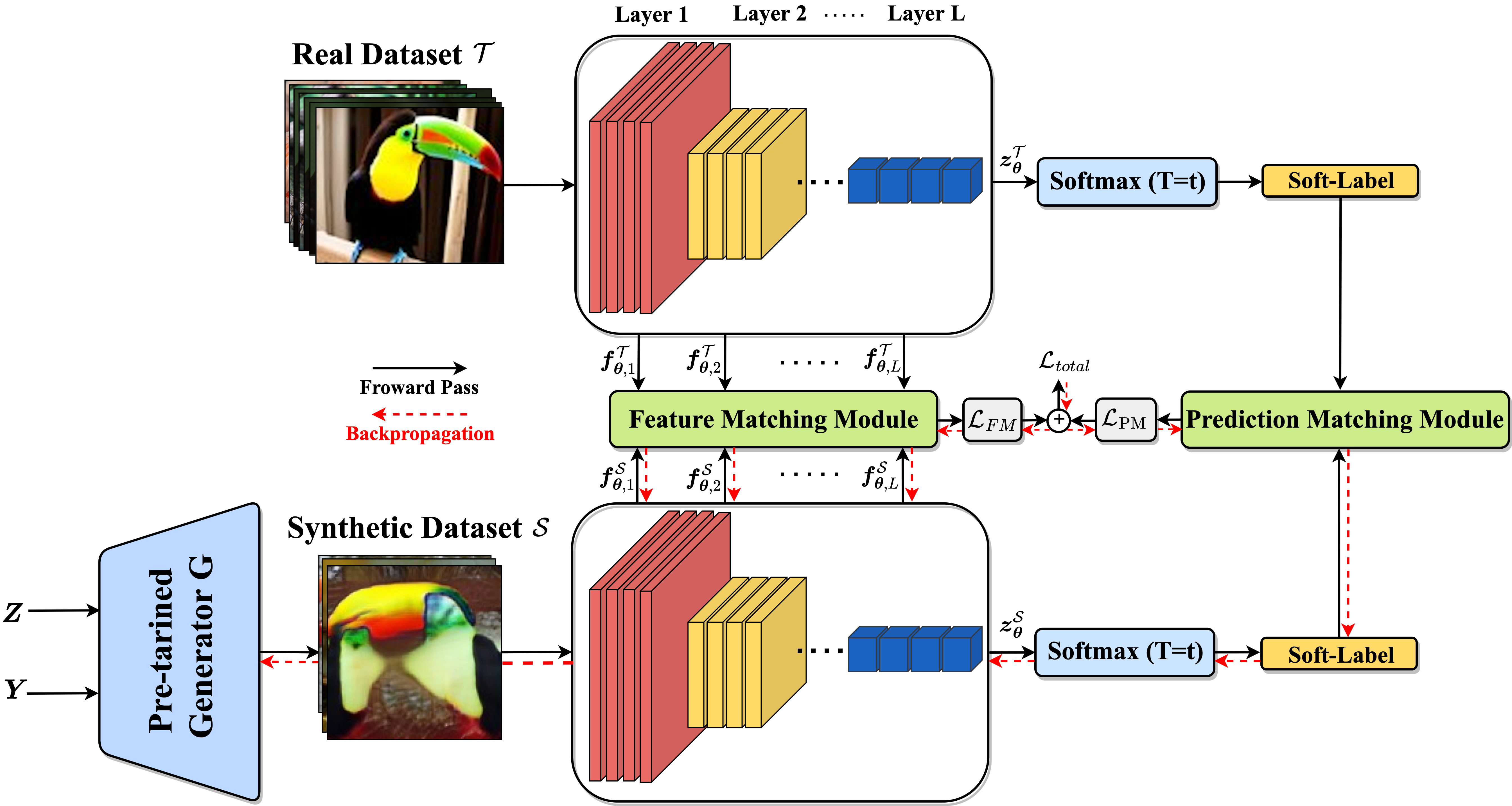

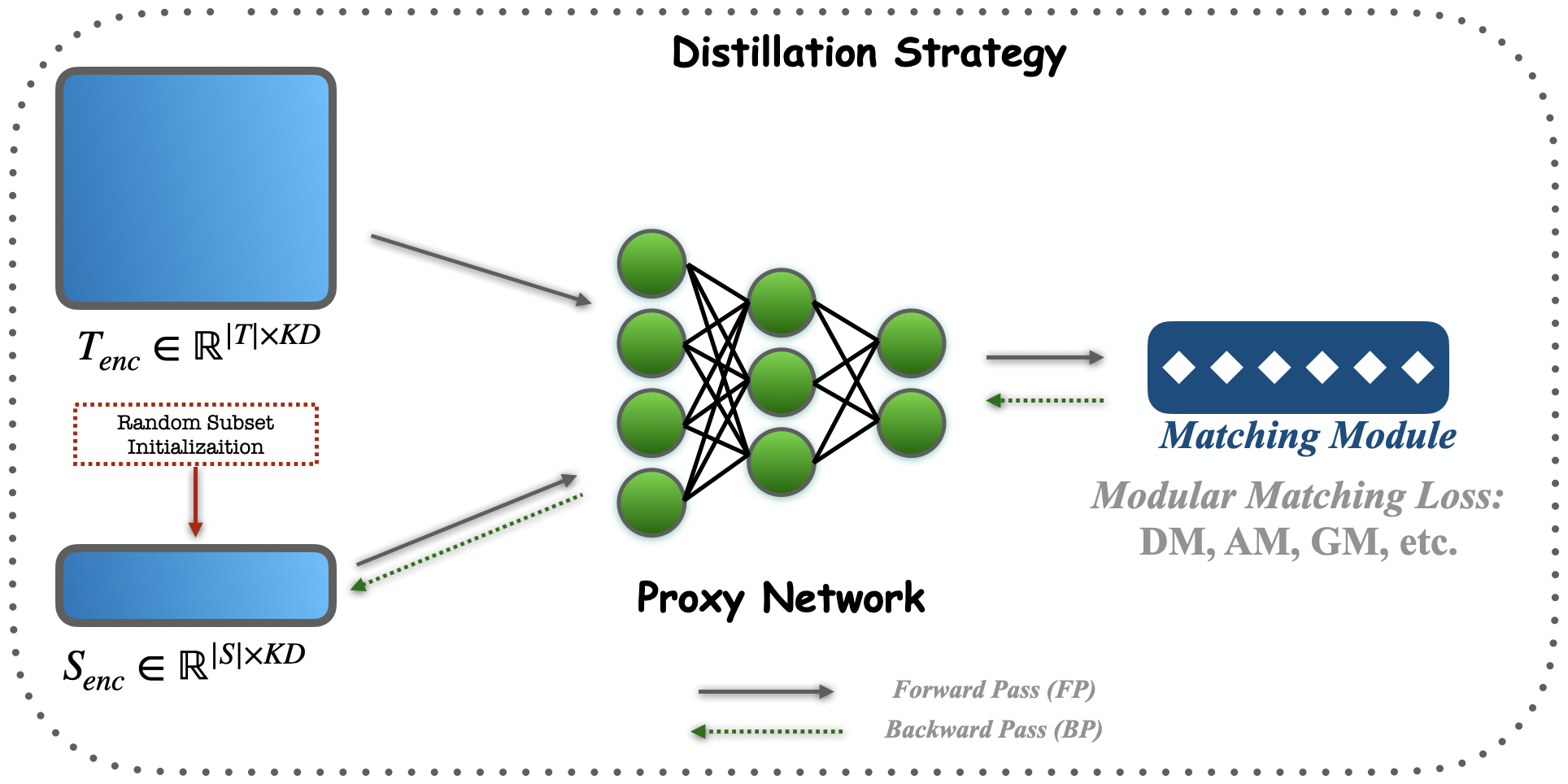

DataDAM: Efficient Dataset Distillation with Attention Matching

Ahmad Sajedi, Samir Khaki, Ehsan Amjadian, Lucy Z. Liu, Yuri A. Lawryshyn, Konstantinos N. Plataniotis

ICCV, 2023

Website | Paper | Code

We present an effective learning framework to distill informative knowledge from a large-scale training dataset into a small, synthetic one. |

|

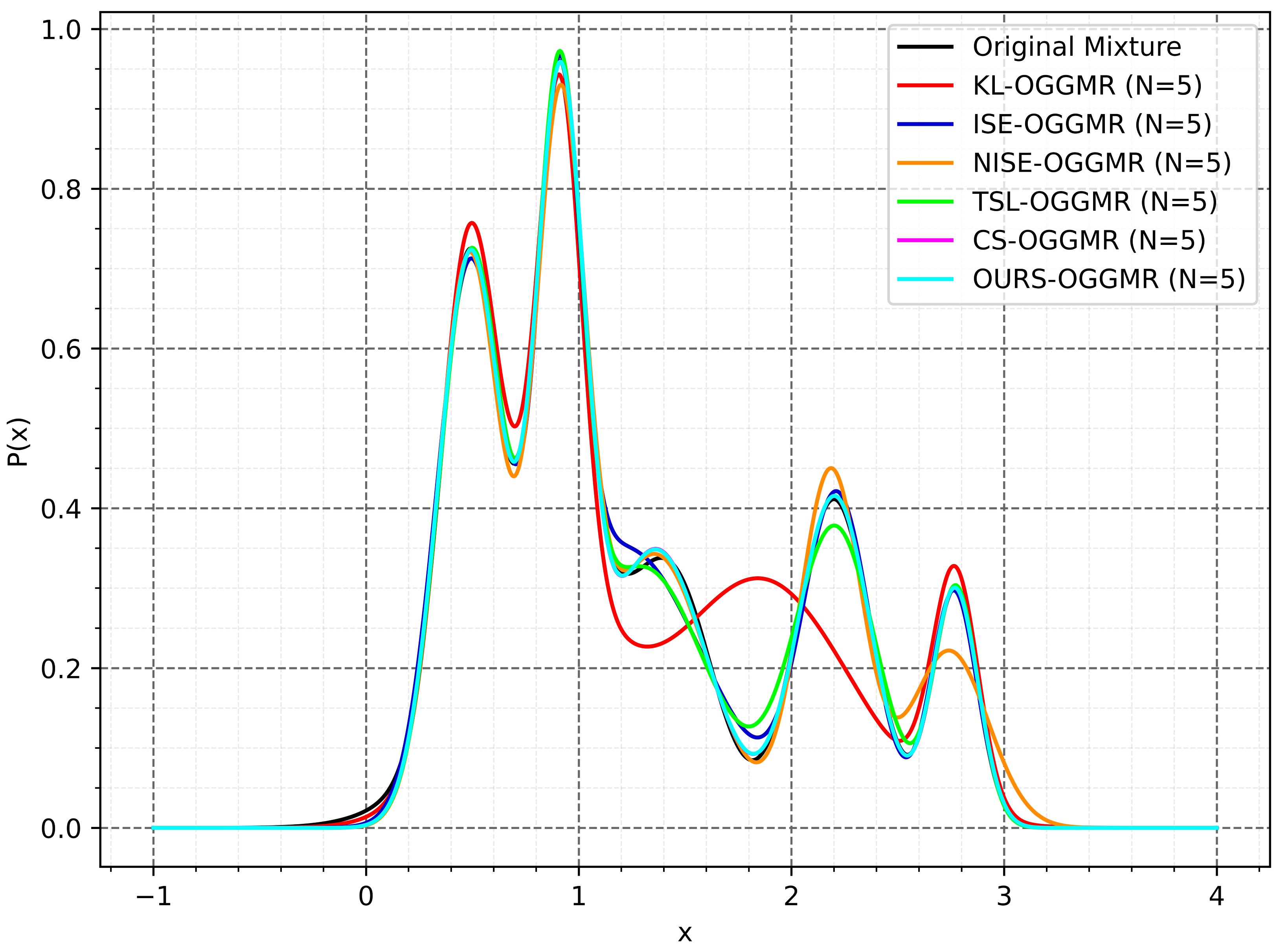

A New Probabilistic Distance Metric With Application In Gaussian Mixture Reduction

Ahmad Sajedi, Yuri A. Lawryshyn, Konstantinos N. Plataniotis

ICASSP, 2023

Paper

We propose a new probabilistic distance metric to compare two continuous probability density functions. This metric provides a closed-form expression for Gaussian Mixture Models. |

|

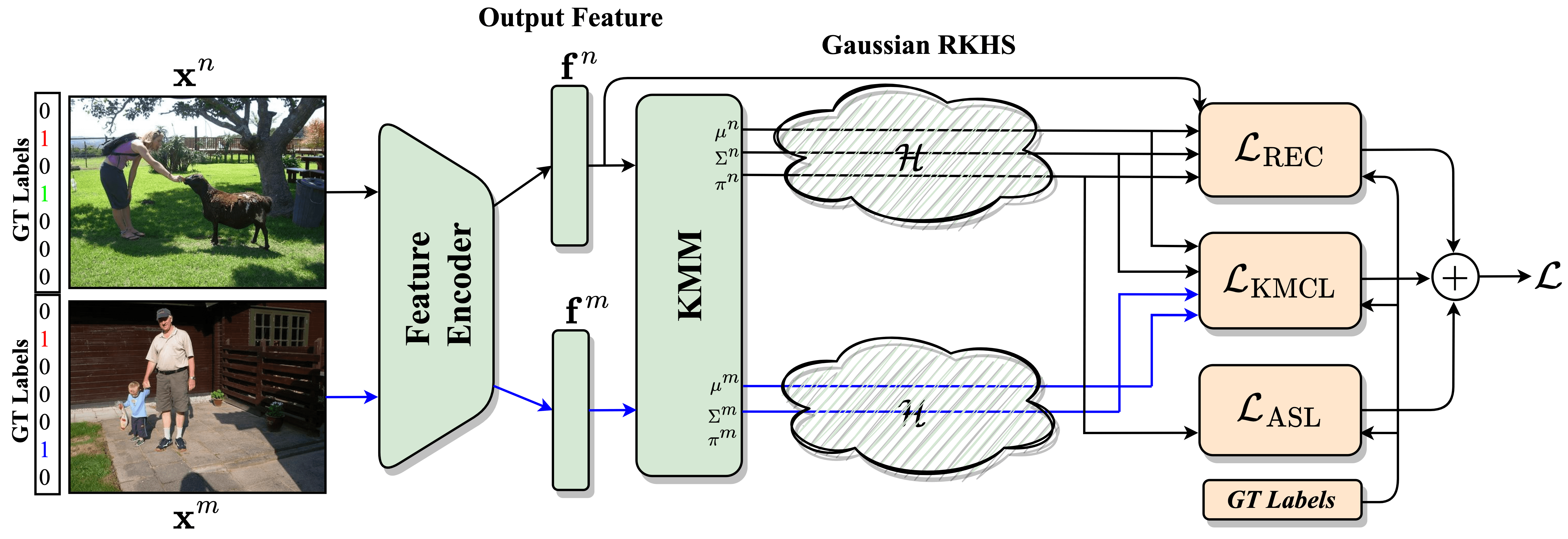

End-to-End Supervised Multilabel Contrastive Learning

Ahmad Sajedi, Samir Khaki, Konstantinos N. Plataniotis, Mahdi S. Hosseini

arXiv, 2023

Paper | Code

We present an end-to-end kernel-based contrastive learning framework designed for multilabel datasets in computer vision and medical imaging. |

|

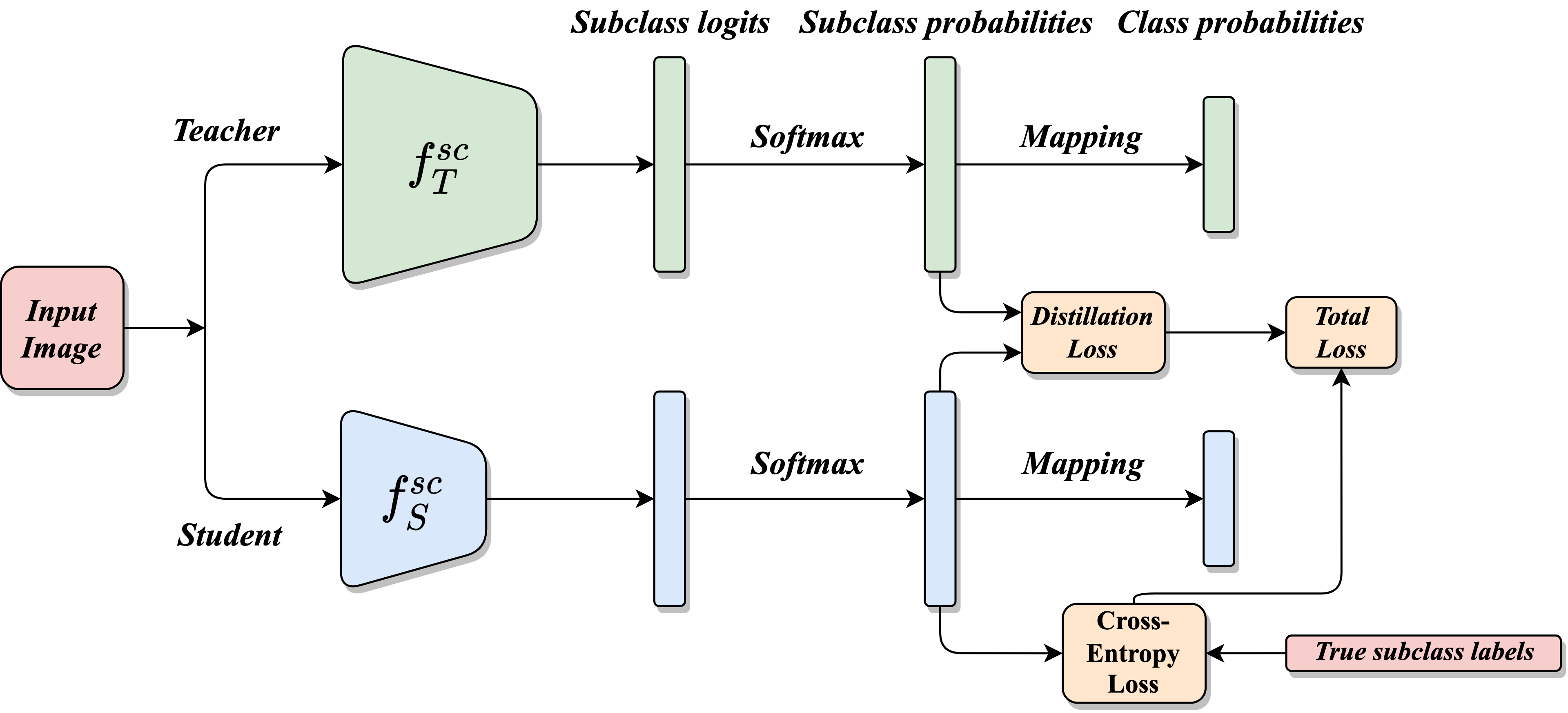

Subclass Knowledge Distillation with Known Subclass Labels

Ahmad Sajedi, Yuri A. Lawryshyn, Konstantinos N. Plataniotis

IVMSP, 2022

Paper

We present subclass knowledge distillation, a method of transferring predicted subclass knowledge from a teacher to a smaller student model, designed for clinical applications. |

|

On the Efficiency of Subclass Knowledge Distillation in Classification Tasks

Ahmad Sajedi, Konstantinos N. Plataniotis

arXiv, 2022

Paper

We introduce a novel model distillation algorithm that leverages knowledge from subclass labels and quantifies the information the teacher can provide to the student through our framework. |

|

High-Performance Convolution Using Sparsity and Patterns for Inference in Deep Convolutional Neural Networks

Hossam Amer, Ahmed H. Salamah, Ahmad Sajedi, En-Hui Yang

arXiv, 2021

Paper | Code

By leveraging feature map sparsity, we introduce two novel convolution algorithms that aim to reduce memory usage and speed up inference while maintaining accuracy.

Patents |

|

Efficient Dataset Distillation with Attention Matching

US Patent

Ahmad Sajedi, Ehsan Amjadian, Samir Khaki, Lucy Z. Liu, Yuri A. Lawryshyn, Konstantinos N. Plataniotis |

|

Data-to-Model Distillation

US Patent

Ahmad Sajedi, Ehsan Amjadian, Samir Khaki, Lucy Z. Liu, Yuri A. Lawryshyn, Konstantinos N. Plataniotis |

|

Tabular Dataset Condensation

US Patent Pending

Samir Khaki, Ahmad Sajedi, Lucy Z. Liu, Yuri A. Lawryshyn, Konstantinos N. Plataniotis |

Education |

|

University of Toronto, Toronto, Canada

Ph.D. in Electrical and Computer Engineering • Sept. 2020 to Sept. 2024 |

|

|

University of Waterloo, Waterloo, Canada

M.Sc. in Electrical and Computer Engineering • Sept. 2018 to Aug. 2020 |

|

|

Amirkabir University of Technology, Tehran, Iran

B.Sc. in Electrical and Computer Engineering • Sept. 2014 to Aug. 2018 |

|

Experiences |

|

Instacart

Machine Learning Engineer II • Oct. 2024 to Present

Director: Shishir Kumar Prasad |

|

|

Royal Bank of Canada (RBC)

Machine Learning Researcher • Jan. 2022 to Sept. 2024

Mentors: Dr. Lucy Z. Liu and Dr. Ehsan Amjadian |

|

|

Centre for Management of Technology & Entrepreneurship (CMTE), University of Toronto

Graduate Research Associate • Sept. 2021 to Sept. 2024

Advisor: Prof. Yuri A. Lawryshyn |

|

|

Multimedia Lab (Bell), University of Toronto

Graduate Research Associate • Sept. 2020 to Sept. 2024

Advisor: Prof. Konstantinos N. Plataniotis |

|

|

Multimedia Communications Lab (Leitch), University of Waterloo

Graduate Research Assistant • Sept. 2018 to Aug. 2020

Advisor: Prof. En-Hui Yang |

|

Professional Academic Activities |

|

Primary Chair:
• The First Dataset Distillation Challenge Workshop at ECCV 2024

Reviewer:
• ICLR 2025
• NeurIPS 2024
• ECCV 2024
• ICASSP 2024

| |

Selected Teaching |

|

Teaching Assistant • ECE1512, Digital Image Processing and Applications • Fall 2023/2022, Winter 2022
Teaching Assistant • MIE1626, Data Science Methods and Statistical Learning • Fall 2023/2022, Winter 2024/2022
Teaching Assistant • ECE602, Convex Optimization • Summer 2021, Winter 2020
Teaching Assistant • ECE302, Probability and Applications • Fall 2023/2022/2021/2020, Winter 2024/2022
Teaching Assistant • ECE286, Probability and Statistics • Winter 2024/2023/2022/2021
Teaching Assistant • STA237, Probability, Statistics, and Data Analysis • Fall 2023/2021

| |

|

Source code from Jon Barron's lovely website. |