Multi-label image classification is a challenging task in many domains, including computer vision and medical imaging. Recent work has introduced graph-based and transformer-based methods to improve performance and capture label dependencies. However, these methods often rely on complex modules that are computationally heavy and hard to interpret.

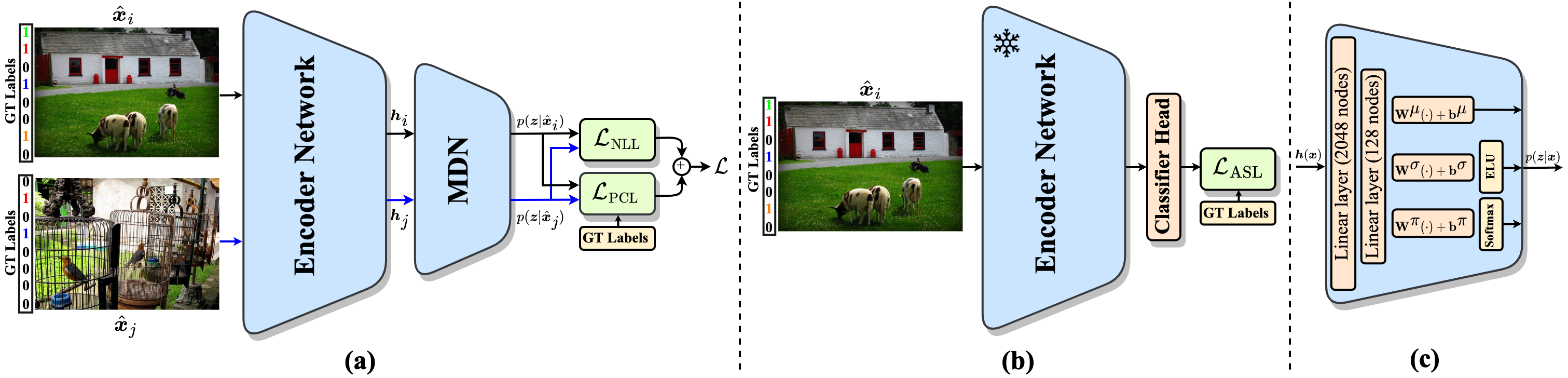

In this paper, we propose Probabilistic Multi-label Contrastive Learning (ProbMCL), a novel framework to address these challenges in multi-label image classification tasks. Our simple yet effective approach employs supervised contrastive learning, in which samples that share enough labels with an anchor image based on a decision threshold are introduced as a positive set. This structure captures label dependencies by pulling positive pair embeddings together and pushing away negative samples that fall below the threshold. We enhance representation learning by incorporating a mixture density network into contrastive learning and generating Gaussian mixture distributions to explore the epistemic uncertainty of the feature encoder.
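To make the positive-set construction concrete, here is a minimal sketch of how positives could be selected for each anchor from multi-hot label vectors. The text above only says that samples must share "enough" labels under a decision threshold, so the overlap measure used here (Jaccard overlap), the default threshold of 0.5, and the function name `positive_mask` are illustrative assumptions and not necessarily the choices made in ProbMCL.

```python
import numpy as np


def positive_mask(labels: np.ndarray, threshold: float = 0.5) -> np.ndarray:
    """Return an (N, N) boolean matrix; entry [i, j] is True when
    sample j counts as a positive for anchor i.

    ASSUMPTION: label overlap is measured with the Jaccard index
    (shared labels / union of labels). The source only states that a
    decision threshold on shared labels is used.
    """
    labels = labels.astype(bool)
    # Pairwise counts of shared labels and of labels present in either sample.
    inter = (labels[:, None, :] & labels[None, :, :]).sum(axis=-1)
    union = (labels[:, None, :] | labels[None, :, :]).sum(axis=-1)
    # Overlap is defined as 0 when neither sample has any label.
    overlap = np.divide(
        inter, union,
        out=np.zeros(inter.shape, dtype=float),
        where=union > 0,
    )
    mask = overlap >= threshold
    # An anchor is never its own positive.
    np.fill_diagonal(mask, False)
    return mask


# Three images with four possible labels (multi-hot encoding).
labels = np.array([
    [1, 1, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 1],
])
mask = positive_mask(labels, threshold=0.5)
```

In this toy batch, the first two images share two of three distinct labels and so form a positive pair, while the third image shares no labels with either and would be treated as a negative for both. A contrastive loss would then pull the embeddings of masked pairs together and push the remaining pairs apart.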

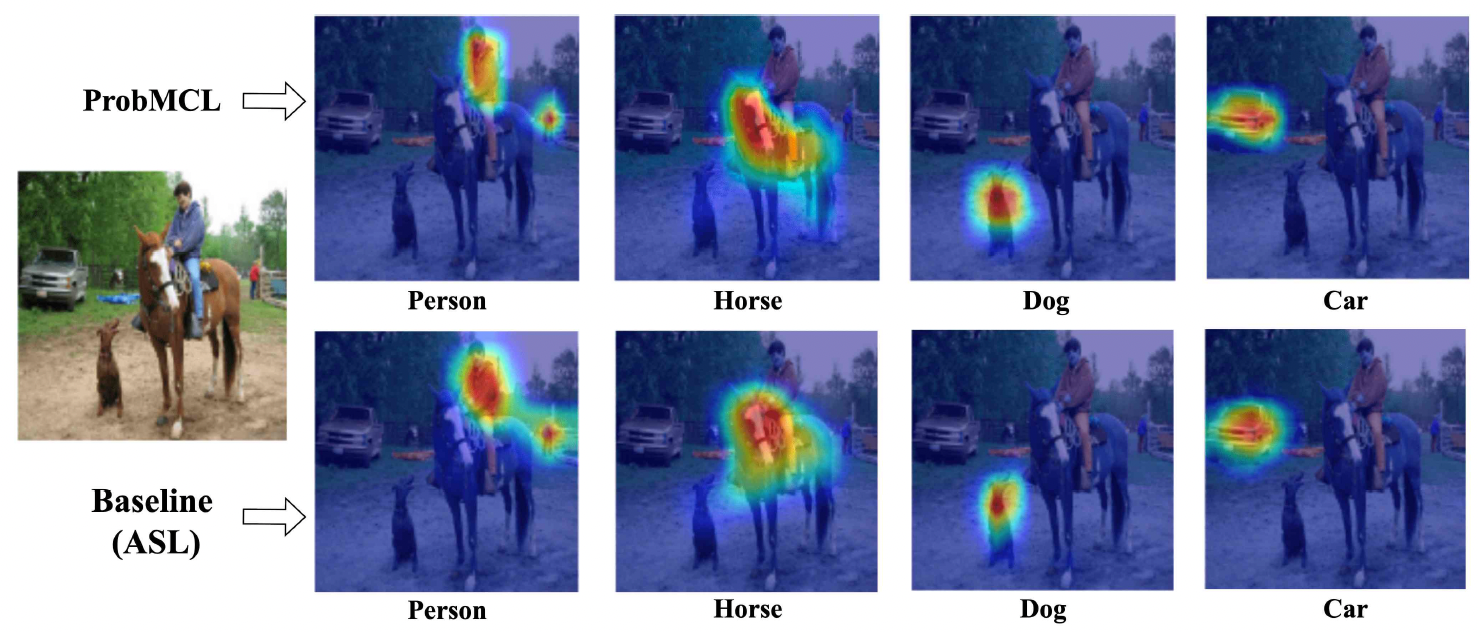

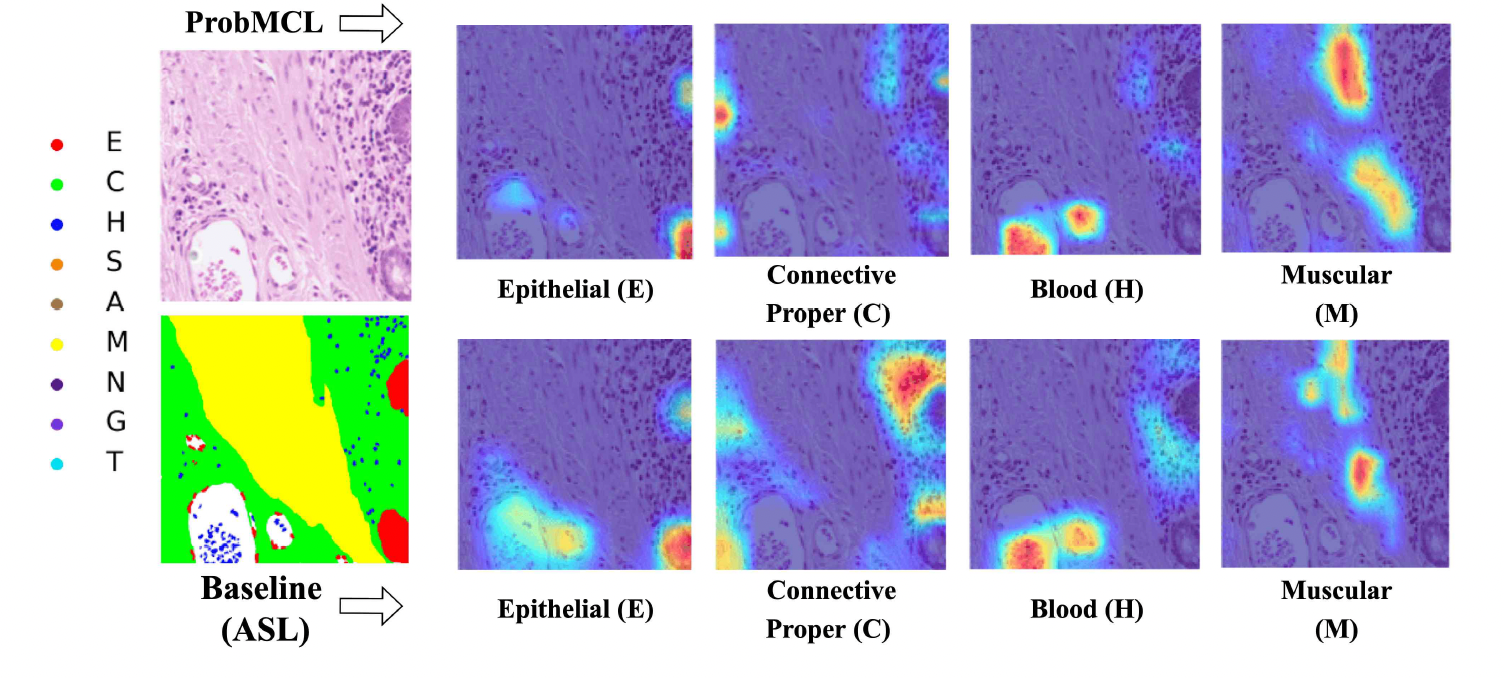

We validate the effectiveness of our framework through experimentation with datasets from the computer vision and medical imaging domains. Our method outperforms the existing state-of-the-art methods while achieving a low computational footprint on both datasets. Visualization analyses also demonstrate that ProbMCL-learned classifiers maintain a meaningful semantic topology.

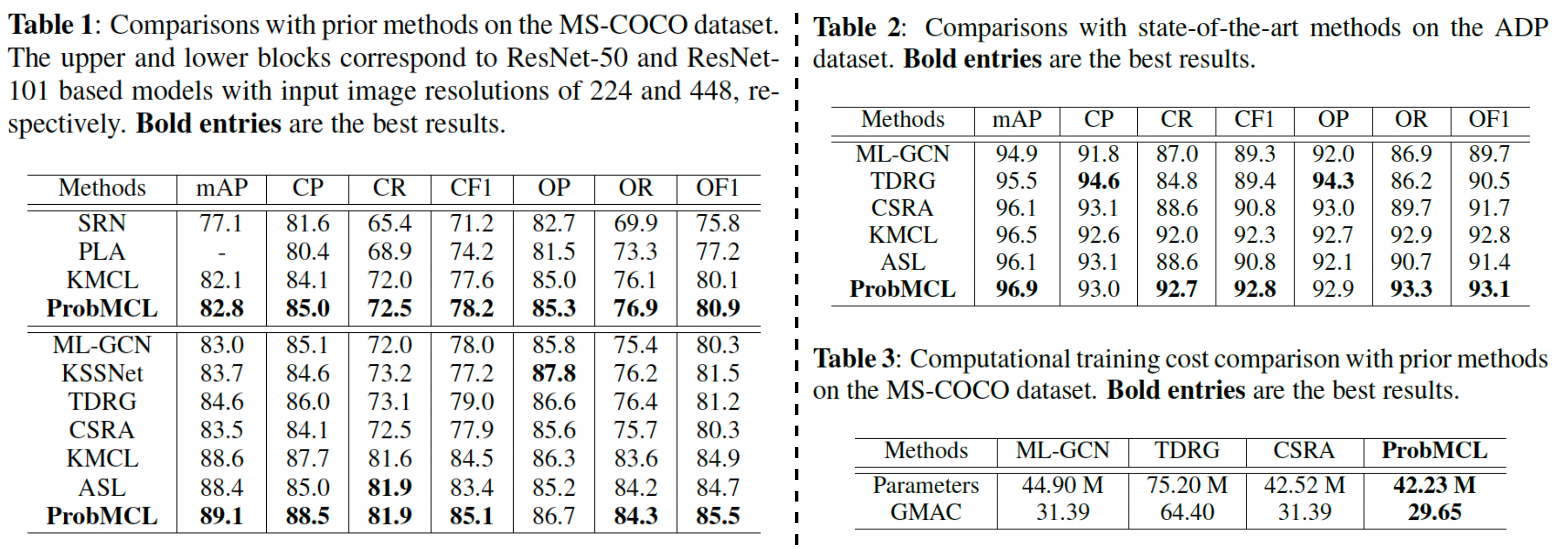

Tables 1 and 2 show that ProbMCL outperforms most prior multi-label methods across various metrics on both the computer vision (MS-COCO) and medical imaging (ADP) datasets. As Table 3 illustrates, it also has a smaller computational footprint than earlier methods that rely on resource-intensive modules. These results underscore the advantages of ProbMCL, particularly when training under computational constraints.

@article{sajedi2024probmcl,
  title={ProbMCL: Simple Probabilistic Contrastive Learning for Multi-Label Visual Classification},
  author={Sajedi, Ahmad and Khaki, Samir and Lawryshyn, Yuri A. and Plataniotis, Konstantinos N.},
  journal={ICASSP 2024 - 2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)},
  year={2024}
}